- Full Conference Pass (FC)
- Full Conference One-Day Pass (1D)

Date: Thursday, December 6th

Time: 9:00am - 10:45am

Venue: Hall B5(2) (5F, B Block)

Session Chair(s): Wolfgang Heidrich, King Abdullah University of Science and Technology (KAUST), Saudi Arabia

A System for Acquiring, Processing, and Rendering Panoramic Light Field Stills for Virtual Reality

Abstract: We present a system for acquiring, processing, and rendering panoramic light field still photography for display in Virtual Reality (VR). We acquire spherical light field datasets with two novel light field camera rigs designed for portable and efficient light field acquisition. We introduce a novel real-time light field reconstruction algorithm that uses a multi-view geometry and a disk-based blending field. We also demonstrate how to use a light field prefiltering operation to project from a high-quality offline reconstruction model into our real-time model while suppressing artifacts. We introduce a practical approach for compressing light fields by modifying the VP9 video codec to provide high-quality compression with real-time, random-access decompression. We combine these components into a complete light field system offering convenient acquisition, compact file size, and high-quality rendering while generating stereo views at 90Hz on commodity VR hardware. Using our system, we built a freely available light field experience application called Welcome to Light Fields; it features a library of panoramic light field stills for consumer VR and has been downloaded over 15,000 times.

Authors/Presenter(s): Ryan S. Overbeck, Google Inc., United States of America

Daniel Erickson, Google Inc., United States of America

Daniel Evangelakos, Google Inc., United States of America

Matt Pharr, Google Inc., United States of America

Paul Debevec, Google Inc., United States of America

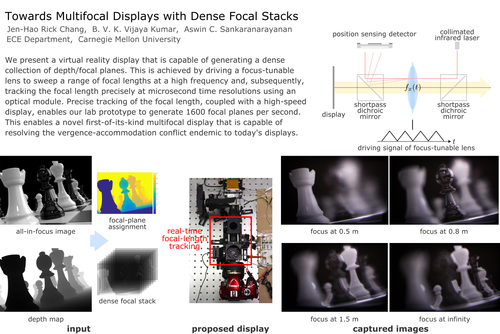

Towards Multifocal Displays with Dense Focal Stacks

Abstract: We present a virtual reality display that is capable of generating a dense collection of depth/focal planes. This is achieved by driving a focus-tunable lens to sweep a range of focal lengths at a high frequency and tracking the focal length precisely, at microsecond time resolution, using an optical module. Precise tracking of the focal length, coupled with a high-speed display, enables our lab prototype to generate 1600 focal planes per second. This enables a first-of-its-kind virtual reality multifocal display capable of resolving the vergence-accommodation conflict endemic to today's displays.

Authors/Presenter(s): Jen-Hao Rick Chang, Carnegie Mellon University, United States of America

Vijayakumar Bhagavatula, Carnegie Mellon University, United States of America

Aswin C. Sankaranarayanan, Carnegie Mellon University, United States of America

Shading Atlas Streaming

Abstract: Streaming high-quality rendering for virtual reality applications requires minimizing perceived latency. We introduce Shading Atlas Streaming (SAS), a novel object-space rendering framework suitable for streaming virtual reality content. SAS decouples server-side shading from client-side rendering, allowing the client to perform framerate upsampling and latency compensation autonomously for short periods of time. The shading information created by the server in object space is temporally coherent and can be efficiently compressed using standard MPEG encoding. Our results show that SAS compares favorably to previous methods for remote image-based rendering in terms of image quality and network bandwidth efficiency. SAS allows highly efficient parallel allocation in a virtualized-texture-like memory hierarchy, solving a common efficiency problem of object-space shading. With SAS, untethered virtual reality headsets can benefit from high-quality rendering without incurring increased latency.

Authors/Presenter(s): Joerg H. Mueller, Graz University of Technology, Austria

Philip Voglreiter, Graz University of Technology, Austria

Mark Dokter, Graz University of Technology, Austria

Thomas Neff, Graz University of Technology, Austria

Mina Makar, Qualcomm Technologies Inc., United States of America

Markus Steinberger, Graz University of Technology, Austria

Dieter Schmalstieg, Graz University of Technology, Qualcomm Technologies Inc., Austria

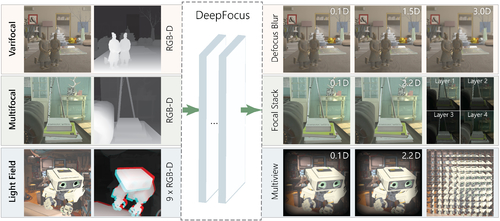

DeepFocus: Learned Image Synthesis for Computational Displays

Abstract: Addressing the vergence-accommodation conflict in head-mounted displays (HMDs) requires resolving two interrelated problems. First, the hardware must support viewing sharp imagery over the full accommodation range of the user. Second, HMDs should accurately reproduce retinal defocus blur to correctly drive accommodation. A multitude of accommodation-supporting HMDs have been proposed, with three architectures receiving particular attention: varifocal, multifocal, and light field displays. These designs all extend depth of focus, but rely on computationally expensive rendering and optimization algorithms to reproduce accurate defocus blur (often limiting content complexity and interactive applications). To date, no unified framework has been proposed to support driving these emerging HMDs using commodity content. In this paper, we introduce DeepFocus, a generic, end-to-end convolutional neural network designed to efficiently solve the full range of computational tasks for accommodation-supporting HMDs. We demonstrate that this network accurately synthesizes defocus blur, focal stacks, multilayer decompositions, and multiview imagery using only commonly available RGB-D images, enabling real-time, near-correct depictions of retinal blur with a broad set of accommodation-supporting HMDs.

Authors/Presenter(s): Lei Xiao, Facebook Reality Labs, United States of America

Anton Kaplanyan, Facebook Reality Labs, United States of America

Alexander Fix, Facebook Reality Labs, United States of America

Matthew Chapman, Facebook Reality Labs, United States of America

Douglas Lanman, Facebook Reality Labs, United States of America