- Full Conference Pass (FC)
- Full Conference One-Day Pass (1D)

Date: Friday, December 7th

Time: 2:15pm - 4:00pm

Venue: Hall B5(1) (5F, B Block)

Session Chair(s): Takaaki Shiratori, Facebook

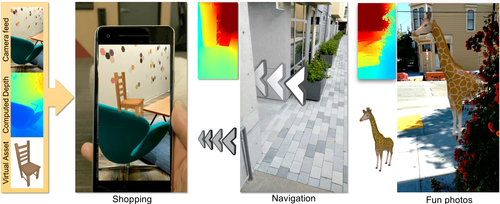

Depth from Motion for Smartphone AR

Abstract: Smartphone augmented reality (AR) has matured from a technology for early adopters, available only on select high-end phones, to one that is truly available to the general public. One of the key breakthroughs has been low-compute methods for six-degree-of-freedom (6DoF) tracking on phones using only the existing hardware (camera and inertial sensors). 6DoF tracking is the cornerstone of smartphone AR, allowing virtual content to be precisely locked on top of the real world. However, to give users a truly believable AR experience, mobile depth is required. Without depth, even simple effects, such as a virtual object being correctly occluded by the real world, are impossible. However, requiring a mobile depth sensor would severely restrict access to such features. In this article, we provide a novel pipeline for mobile depth that supports a wide array of mobile phones and uses only the existing monocular color sensor. Through several technical contributions, we provide the ability to compute low-latency dense depth maps using only a single CPU core of a wide range of (mid- to high-end) mobile phones. We demonstrate the capabilities of our approach on high-level AR applications, including real-time navigation and shopping.

Authors/Presenter(s): Julien Valentin, Google, United States of America

Adarsh Kowdle, Google, United States of America

Jonathan Barron, Google, United States of America

Neal Wadhwa, Google, United States of America

Max Dzitsiuk, Google, United States of America

Michael Schoenberg, Google, United States of America

Vivek Verma, Google, United States of America

Ambrus Csaszar, Google, United States of America

Eric Turner, Google, United States of America

Ivan Dryanovski, Google, United States of America

Joao Afonso, Google, United States of America

Jose Pascoal, Google, United States of America

Konstantine Tsotsos, Google, United States of America

Mira Leung, Google, United States of America

Mirko Schmidt, Google, United States of America

Onur Guleryuz, Google, United States of America

Sameh Khamis, Google, United States of America

Vladimir Tankovitch, Google, United States of America

Sean Fanello, Google, United States of America

Shahram Izadi, Google, United States of America

Christoph Rhemann, Google, United States of America
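The abstract does not detail the depth pipeline, but the geometric core of any depth-from-motion approach is that two frames with a known relative pose (here, from 6DoF tracking) behave like a stereo pair. The sketch below is a minimal illustration under strong simplifying assumptions, not the paper's method: the frames are assumed already rectified with purely sideways camera motion, matching is brute-force SAD along a single scanline, and depth follows the pinhole relation Z = f·B/d. The function names `baseline_from_poses`, `scanline_disparity` and `depth_from_disparity` are hypothetical.

```python
import numpy as np


def baseline_from_poses(t_ref, t_cur):
    """Distance between two camera centres (metres).

    Assumes the positions come from a 6DoF tracker (hypothetical inputs).
    """
    return float(np.linalg.norm(np.asarray(t_cur, float) - np.asarray(t_ref, float)))


def scanline_disparity(ref_row, cur_row, max_disp, half_win=2):
    """Brute-force SAD matching along one rectified scanline.

    A point at column x in ref_row is assumed to appear at x - d in cur_row.
    Border pixels that cannot hold a full window keep disparity 0.
    """
    ref_row = np.asarray(ref_row, float)
    cur_row = np.asarray(cur_row, float)
    n = ref_row.size
    disp = np.zeros(n)
    for x in range(half_win, n - half_win):
        patch = ref_row[x - half_win:x + half_win + 1]
        best_cost, best_d = np.inf, 0
        for d in range(max_disp + 1):
            xc = x - d
            if xc - half_win < 0:  # candidate window would leave the image
                break
            cand = cur_row[xc - half_win:xc + half_win + 1]
            cost = np.abs(patch - cand).sum()  # sum of absolute differences
            if cost < best_cost:
                best_cost, best_d = cost, d
        disp[x] = best_d
    return disp


def depth_from_disparity(disparity, focal_px, baseline_m, min_disp=1e-6):
    """Convert disparity (pixels) to metric depth with Z = f * B / d.

    Pixels with (near-)zero disparity are marked as infinitely far.
    """
    disparity = np.asarray(disparity, dtype=float)
    depth = np.full(disparity.shape, np.inf)
    valid = disparity > min_disp
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth
```

For example, with a 500-pixel focal length and 6 cm of tracked sideways motion, a 3-pixel disparity corresponds to a depth of about 10 m. A real phone pipeline would also have to handle rotation, rectification, textureless regions and outliers, none of which this sketch attempts.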

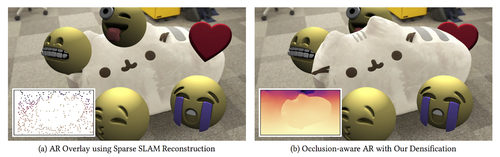

Fast Depth Densification for Occlusion-aware Augmented Reality

Abstract: Current AR systems track sparse geometric features, which limits their ability to interact with the scene, since away from the sparse features the depth is unknown. For this reason, most AR effects are pure overlays that can never be occluded by real objects. We present a novel algorithm that propagates sparse depth to every pixel in near real time. The produced depth maps are spatio-temporally smooth but exhibit sharp discontinuities at depth edges. This enables AR effects that can fully interact with, and be occluded by, the real scene. Our algorithm takes a video and a sparse SLAM reconstruction as input. It starts by estimating soft depth edges from the gradient of optical flow fields. Because optical flow is unreliable near occlusions, we compute forward and backward flow fields and fuse the resulting depth edges using a novel reliability measure. We then localize the depth edges by thinning them and aligning them with image edges. Finally, we optimize the propagated depth to be smooth while encouraging discontinuities at the recovered depth edges. We present results for numerous real-world examples and demonstrate the effectiveness of our approach for several occlusion-aware AR video effects. To quantitatively evaluate our algorithm, we characterize the properties that make depth maps desirable for AR applications and present novel evaluation metrics that capture how well these properties are satisfied. Our results compare favorably to a set of competitive baseline algorithms in this context.

Authors/Presenter(s): Aleksander Holynski, University of Washington, United States of America

Johannes Kopf, Facebook, United States of America
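The final step of the densification abstract (smooth propagation that still breaks at depth edges) can be illustrated as a least-squares problem. The sketch below is a simplified single-frame version under stated assumptions, not the authors' solver: it minimizes E(D) = λ Σ_{p∈S} (D_p − s_p)² + Σ_{(p,q)} w_pq (D_p − D_q)² over 4-neighbour pairs, with the hypothetical weight w_pq = max(1 − max(e_p, e_q), ε) taken from a soft depth-edge map e in [0, 1]. It omits the temporal term and the flow-based edge estimation, and it uses a dense solve that is only practical for tiny images. The function name `densify` and its parameters are hypothetical.

```python
import numpy as np


def densify(sparse_depth, mask, edge_map, lam=10.0, eps=1e-3):
    """Propagate sparse depth to every pixel with edge-aware smoothing.

    sparse_depth: (H, W) depth values, only read where mask is True.
    mask:         (H, W) bool, True at sparse (e.g. SLAM) depth samples.
    edge_map:     (H, W) soft depth-edge strength in [0, 1].
    Solves the normal equations (lam * M + L_w) D = lam * M * s, where M is
    the diagonal sample mask and L_w the edge-weighted graph Laplacian.
    """
    h, w = sparse_depth.shape
    n = h * w
    idx = np.arange(n).reshape(h, w)
    m = mask.ravel().astype(float)
    s = np.where(mask, sparse_depth, 0.0).ravel()  # avoid NaN * 0 at unknowns

    A = np.zeros((n, n))
    A[np.arange(n), np.arange(n)] += lam * m  # data term on the diagonal
    b = lam * m * s

    e = edge_map.ravel()
    # Horizontal and vertical 4-neighbour pairs (p, q).
    pairs = [(idx[:, :-1].ravel(), idx[:, 1:].ravel()),
             (idx[:-1, :].ravel(), idx[1:, :].ravel())]
    for p, q in pairs:
        # Weak coupling across edges; eps keeps the system positive definite.
        wgt = np.maximum(1.0 - np.maximum(e[p], e[q]), eps)
        np.add.at(A, (p, p), wgt)
        np.add.at(A, (q, q), wgt)
        np.add.at(A, (p, q), -wgt)
        np.add.at(A, (q, p), -wgt)

    return np.linalg.solve(A, b).reshape(h, w)
```

With one sample of depth 1 on the left, one of depth 3 on the right, and a depth edge marked on a column between them, the two sides stay near 1 and 3 instead of blending into a ramp. This matches the "smooth but sharp at depth edges" behaviour the abstract describes. A practical implementation would use a sparse solver and warm-start from the previous frame.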

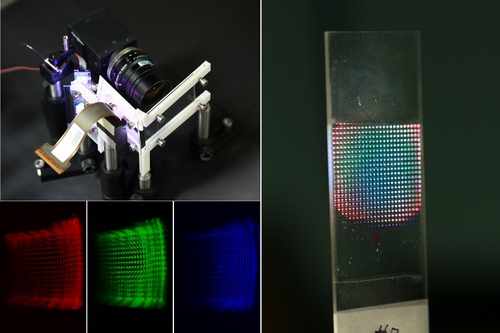

Holographic Near-eye Display with Expanded Eye-box

Abstract: Holographic displays have great potential to realize mixed reality by modulating the wavefront of light in a fundamental manner. As computational displays, holographic displays offer a large degree of freedom, enabling features such as focus cue generation and vision correction. However, the limited bandwidth of the spatial light modulator imposes an inherent trade-off between the field of view and the eye-box size. We therefore demonstrate the first practical eye-box expansion method for a holographic near-eye display. Instead of providing an intrinsically large exit pupil, we shift the optical system's exit pupil with pupil tracking to cover the expanded eye-box area. For a compact implementation, we propose a pupil-shifting holographic optical element (PSHOE) that reduces the form factor required for exit-pupil shifting. A thorough analysis of the design parameters and display performance is provided. In particular, we provide a comprehensive analysis of the incorporation of the holographic optical element into a holographic display system. The influence of holographic optical elements on the intrinsic exit pupil and on pupil switching is revealed by numerical simulation and Wigner distribution function analysis.

Authors/Presenter(s): Changwon Jang, Seoul National University, South Korea

Kiseung Bang, Seoul National University, South Korea

Gang Li, Seoul National University, South Korea

Byoungho Lee, Seoul National University, South Korea
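The field-of-view versus eye-box trade-off mentioned in the holographic display abstract can be made concrete with a standard textbook approximation. This approximation is an assumption and is not taken from the paper. Consider an SLM with N pixels at pitch p, placed at the front focal plane of an eyepiece of focal length f. The exit pupil (eye-box) width is about λf/p and the field of view is 2·arctan(Np/(2f)). In the small-angle limit, FOV ≈ Np/f, so eye-box × FOV ≈ λN, which is fixed by the SLM. The function name and the parameter values below are hypothetical.

```python
import math


def eyebox_and_fov(wavelength_m, pixel_pitch_m, n_pixels, focal_m):
    """Eye-box width (m) and full field of view (rad) for a simple
    Fourier-type holographic near-eye display (textbook approximation).

    eye-box = wavelength * f / pitch   (spacing of diffraction orders)
    FOV     = 2 * atan(N * pitch / (2 * f))
    """
    eyebox = wavelength_m * focal_m / pixel_pitch_m
    fov = 2.0 * math.atan(n_pixels * pixel_pitch_m / (2.0 * focal_m))
    return eyebox, fov


if __name__ == "__main__":
    # Hypothetical green laser, 8 um pitch, 1920-pixel-wide SLM.
    for f in (0.05, 0.025):
        e, fov = eyebox_and_fov(532e-9, 8e-6, 1920, f)
        print(f"f = {f * 1e3:.0f} mm: eye-box {e * 1e3:.2f} mm, "
              f"FOV {math.degrees(fov):.1f} deg")
```

Halving the focal length roughly doubles the field of view but halves the eye-box. This is why the paper shifts a small exit pupil to follow the tracked eye rather than trying to make the intrinsic exit pupil larger.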

MIDAS Projection: Markerless and Modelless Dynamic Projection Mapping for Material Representation

Abstract: The visual appearance of an object can be disguised by projecting virtual shading onto it, as if overwriting its material. However, conventional projection-mapping methods depend on markers on the target or on a model of the target's shape, which limits the types of targets and the visual quality. In this paper, we focus on the fact that the shading of a virtual material in a virtual scene is mainly characterized by the surface normals of the target, and we attempt to realize markerless and modelless projection mapping for material representation. To handle various targets, including static, dynamic, rigid, soft, and fluid objects, without any interference with visible light, we measure surface normals in the infrared region in real time and project material shading using a novel high-speed texturing algorithm in screen space. Our system achieved 500-fps projection mapping of a uniform material and a tileable-textured material with millisecond-order latency, and it realized dynamic and flexible material representation for unknown objects. We also demonstrated advanced applications and showed the expressive shading performance of our technique.

Authors/Presenter(s): Leo Miyashita, The University of Tokyo, Japan

Yoshihiro Watanabe, Tokyo Institute of Technology, Japan

Masatoshi Ishikawa, The University of Tokyo, Japan
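The MIDAS abstract rests on the observation that material shading is mainly determined by per-pixel surface normals, so no target model is needed. The sketch below illustrates that idea with a generic screen-space reflectance model. The choice of Lambertian diffuse plus Blinn-Phong specular is an assumption; the paper's actual shading model and its texturing algorithm are not described in the abstract. The function `shade` and its parameters are hypothetical, and the normal map is assumed to contain no zero vectors.

```python
import numpy as np


def normalize(v):
    """Normalize vectors along the last axis (assumes non-zero length)."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v, axis=-1, keepdims=True)


def shade(normals, albedo, light_dir, view_dir=(0.0, 0.0, 1.0),
          ks=0.0, shininess=32.0):
    """Screen-space shading computed only from a per-pixel normal map.

    normals:   (H, W, 3) surface normals, e.g. measured in infrared.
    albedo:    (3,) or (H, W, 3) RGB diffuse colour in [0, 1].
    light_dir: direction towards a virtual light.
    Returns an (H, W, 3) image clipped to [0, 1] for projection.
    """
    n = normalize(normals)
    l = normalize(light_dir)
    v = normalize(view_dir)
    h = normalize(l + v)                       # Blinn-Phong half vector

    ndotl = np.clip(n @ l, 0.0, None)          # (H, W) Lambertian term
    ndoth = np.clip(n @ h, 0.0, None)          # (H, W) specular term
    diffuse = np.asarray(albedo, dtype=float) * ndotl[..., None]
    # No highlight on surfaces facing away from the light.
    specular = ks * (ndoth ** shininess)[..., None] * (ndotl > 0)[..., None]
    return np.clip(diffuse + specular, 0.0, 1.0)
```

Because each output pixel depends only on its own normal, this kind of shading is embarrassingly parallel, which is consistent with the high frame rates the abstract reports. A real system would also have to compensate for the projector response and the target's own reflectance.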