- Full Conference Pass (FC)

- Full Conference One-Day Pass (1D)

Date: Friday, December 7th

Time: 11:00am - 12:45pm

Venue: Hall B5(2) (5F, B Block)

Session Chair(s): Tien-Tsin Wong, The Chinese University of Hong Kong, Hong Kong

Image Smoothing via Unsupervised Learning

Abstract: Image smoothing is a fundamental component of many disparate computer vision and graphics applications. In this paper, we present a unified unsupervised (label-free) learning framework that facilitates generating flexible and high-quality smoothing effects by directly learning from data using deep convolutional neural networks (CNNs). The heart of the design is the training signal, a novel energy function that includes an edge-preserving regularizer, which helps maintain important yet potentially vulnerable image structures, and a spatially-adaptive Lp flattening criterion, which imposes different forms of regularization onto different image regions for better smoothing quality. We implement a diverse set of image smoothing solutions employing the unified framework, targeting various applications such as image abstraction, pencil sketching, detail enhancement, texture removal and content-aware image manipulation, and obtain results comparable with or better than previous methods. Moreover, our method is extremely fast with a modern GPU (e.g., 200 fps for 1280x720 images).

Authors/Presenter(s): Qingnan Fan, Shandong University, Beijing Film Academy, China

Jiaolong Yang, Microsoft Research Asia, China

David Wipf, Microsoft Research Asia, United States of America

Baoquan Chen, Peking University, Shandong University, China

Xin Tong, Microsoft Research Asia, China
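As a rough illustration of the energy-function idea in the abstract above, the following sketch scores a candidate smoothed 1D signal with a data term plus a flattening term whose exponent p varies per position. It is a toy under stated assumptions, not the paper's energy: the function name `smoothing_energy`, the weight `lam`, and the p values (0.8 in flat regions, 2 at the edge) are hypothetical, and the edge-preserving regularizer is omitted entirely.

```python
from typing import Sequence


def smoothing_energy(
    output: Sequence[float],
    source: Sequence[float],
    p_per_gap: Sequence[float],
    lam: float = 1.0,
) -> float:
    """Toy energy: squared data term + spatially varying |difference|^p term.

    p_per_gap[i] is the exponent applied to the difference between
    output[i] and output[i + 1]. All names and values are illustrative.
    """
    if len(output) != len(source):
        raise ValueError("output and source must have the same length")
    if len(p_per_gap) != len(output) - 1:
        raise ValueError("need one exponent per neighboring pair")

    # Data term: keep the output close to the input signal.
    data = sum((o - s) ** 2 for o, s in zip(output, source))

    # Flattening term: penalize neighbor differences, with a per-gap exponent.
    flat = sum(
        abs(output[i + 1] - output[i]) ** p for i, p in enumerate(p_per_gap)
    )
    return data + lam * flat


# A noisy step signal and a hand-made "smoothed" candidate.
source = [0.0, 0.1, 0.0, 1.0, 0.9, 1.0]
smoothed = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]

# Hypothetical exponents: 0.8 inside flat regions, 2 across the step edge.
p_per_gap = [0.8, 0.8, 2.0, 0.8, 0.8]

energy_identity = smoothing_energy(source, source, p_per_gap)
energy_smoothed = smoothing_energy(smoothed, source, p_per_gap)
print(energy_identity, energy_smoothed)
```

Under these assumed settings the flattened candidate scores lower than leaving the signal untouched, which is the behavior a network trained to minimize such an energy would be pushed toward.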

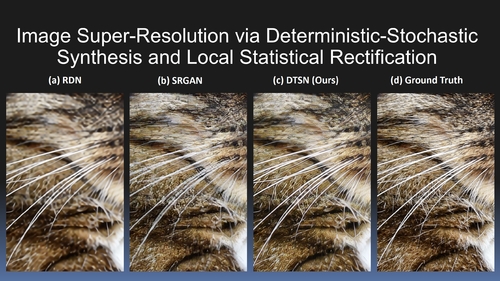

Image Super-Resolution via Deterministic-Stochastic Synthesis and Local Statistical Rectification

Abstract: Single image super-resolution has been a popular research topic in the last two decades and has recently received a new wave of interest due to deep neural networks. In this paper, we approach this problem from a different perspective. With respect to a downsampled low-resolution image, we model a high-resolution image as a combination of two components, a deterministic component and a stochastic component. The deterministic component can be recovered from the low-frequency signals in the downsampled image. The stochastic component, on the other hand, contains the signals that have little correlation with the low-resolution image. We adopt two complementary methods for generating these two components. While generative adversarial networks are used for the stochastic component, deterministic component reconstruction is formulated as a regression problem solved using deep neural networks. Since the deterministic component exhibits clearer local orientations, we design novel loss functions tailored for such properties for training the deep regression network. These two methods are first applied to the entire input image to produce two distinct high-resolution images. Afterwards, these two images are fused together using another deep neural network that also performs local statistical rectification, which tries to make the local statistics of the fused image match those of the ground-truth image. Quantitative results and a user study indicate that the proposed method outperforms existing state-of-the-art algorithms by a clear margin.

Authors/Presenter(s): Weifeng Ge, The University of Hong Kong, Hong Kong

Bingchen Gong, The University of Hong Kong, Hong Kong

Yizhou Yu, The University of Hong Kong, Hong Kong
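The two-component view in the abstract above can be loosely illustrated on a 1D signal: the part that survives downsampling stands in for the deterministic component, and the residual stands in for the stochastic component. This is only an analogy under simple assumptions (pairwise averaging and nearest-neighbor upsampling); the paper generates both components with neural networks, and the helper names below are hypothetical.

```python
from typing import List, Sequence


def downsample_pairs(signal: Sequence[float]) -> List[float]:
    """Halve the resolution by averaging each pair of neighboring samples."""
    if len(signal) % 2 != 0:
        raise ValueError("signal length must be even")
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal), 2)]


def upsample_repeat(low: Sequence[float]) -> List[float]:
    """Double the resolution by repeating each sample (nearest neighbor)."""
    return [value for value in low for _ in range(2)]


high_res = [1.0, 3.0, 2.0, 2.0, 5.0, 1.0]

# What the low-resolution observation retains.
low_res = downsample_pairs(high_res)

# Stand-in for the deterministic component: recoverable from low_res alone.
deterministic = upsample_repeat(low_res)

# Stand-in for the stochastic component: detail the low-res signal lost.
stochastic = [h - d for h, d in zip(high_res, deterministic)]

# The two components add back up to the original high-resolution signal.
reconstructed = [d + s for d, s in zip(deterministic, stochastic)]
print(low_res, stochastic, reconstructed)
```

In this toy the residual averages to zero within each pair, so it cannot be inferred from the low-resolution samples, which mirrors why the abstract treats that component with a generative model rather than regression.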

Two-stage Sketch Colorization

Abstract: Sketch colorization, or line art colorization, is a research field with considerable market demand. Unlike photo colorization, which adds colors to textured grayscale input, sketch colorization is more challenging: we have to generate proper color, texture, and gradient from abstract sketch lines. In this paper, we propose a semi-automatic learning-based framework to colorize sketch images with proper texture and gradient. Our framework consists of two stages. In the first stage, our interactive system guesses color regions and splashes many different colors on the sketch image to obtain a color draft. In the second stage, the interactive system detects the mistakes in the draft and then corrects the wrong colors to achieve a refined painting. Compared to existing methods, the two-stage design resolves artifacts like water-color blurring, color distortion, and dull textures. We build interactive software based on our model in which users can iteratively edit and refine the colorization. We then evaluate our framework through a multi-dimensional user study, which shows that our method outperforms state-of-the-art techniques and industrial applications in several respects, including visual quality, precision of user control, and user experience.

Authors/Presenter(s): LvMin Zhang, Soochow University, PaintsTransfer, China

Chengze Li, The Chinese University of Hong Kong, China

Tien-Tsin Wong, The Chinese University of Hong Kong, China

Yi Ji, Soochow University, China

ChunPing Liu, Soochow University, China

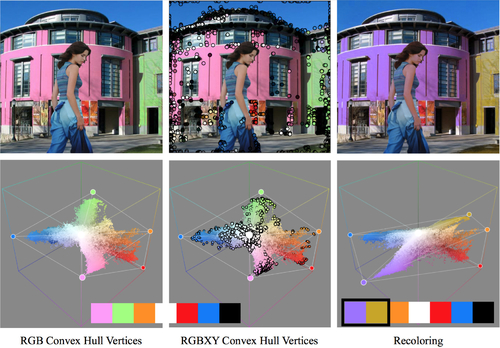

Efficient palette-based decomposition and recoloring of images via RGBXY-space geometry

Abstract: We introduce an extremely scalable and efficient yet simple palette-based image decomposition algorithm. Given an RGB image and a set of palette colors, our algorithm decomposes the image into a set of additive mixing layers, each of which corresponds to a palette color applied with varying weight. Our approach is based on the geometry of images in RGBXY-space. This new geometric approach is orders of magnitude more efficient than previous work and requires no numerical optimization. We provide an implementation of the algorithm in 48 lines of Python code. We demonstrate a real-time layer decomposition tool in which users can interactively edit the palette to adjust the layers. After preprocessing, our algorithm can decompose 6 MP images into layers in 20 milliseconds.

Authors/Presenter(s): Jianchao Tan, George Mason University, United States of America

Jose Echevarria, Adobe Research, United States of America

Yotam Gingold, George Mason University, ygingold@gmu.edu, United States of America
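To make the "additive mixing layers" in the abstract above concrete, the sketch below shows only the final mixing step: each pixel is a weighted sum of palette colors, so editing a palette entry recolors every pixel that uses it. It does not implement the RGBXY-space geometry that computes the weights, and it is not the authors' 48-line implementation; the weights are supplied by hand, and the names `mix` and `recolor` are hypothetical.

```python
from typing import List, Sequence, Tuple

Color = Tuple[float, float, float]


def mix(weights: Sequence[float], palette: Sequence[Color]) -> Color:
    """Blend palette colors using one mixing weight per palette color."""
    if len(weights) != len(palette):
        raise ValueError("need exactly one weight per palette color")
    r = sum(w * c[0] for w, c in zip(weights, palette))
    g = sum(w * c[1] for w, c in zip(weights, palette))
    b = sum(w * c[2] for w, c in zip(weights, palette))
    return (r, g, b)


def recolor(
    pixel_weights: Sequence[Sequence[float]], palette: Sequence[Color]
) -> List[Color]:
    """Rebuild every pixel from its fixed weights and a (possibly edited) palette."""
    return [mix(weights, palette) for weights in pixel_weights]


# Assumed palette: red, blue, white (RGB values in [0, 1]).
palette = [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (1.0, 1.0, 1.0)]

# Assumed per-pixel weights; each row is non-negative and sums to 1.
pixel_weights = [
    (1.0, 0.0, 0.0),
    (0.5, 0.5, 0.0),
    (0.25, 0.25, 0.5),
]

original = recolor(pixel_weights, palette)

# Swap red for green; the weights stay fixed, so only the mixing is redone.
edited_palette = [(0.0, 1.0, 0.0), palette[1], palette[2]]
recolored = recolor(pixel_weights, edited_palette)
print(original, recolored)
```

Because recoloring reuses the stored weights, it costs only one weighted sum per pixel, which is consistent with the interactive palette editing the abstract describes.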