- Full Conference Pass (FC)

- Full Conference One-Day Pass (1D)

Date: Friday, December 7th

Time: 9:00am - 10:45am

Venue: Hall B5(1) (5F, B Block)

Session Chair(s): Hao (Richard) Zhang, Simon Fraser University

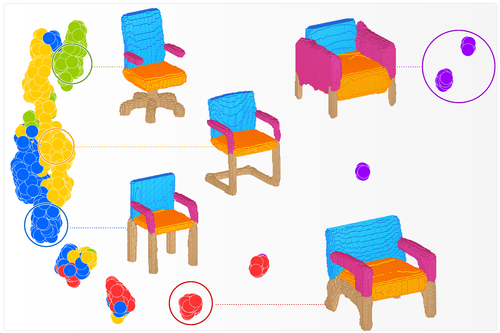

Global-to-Local Generative Model for 3D Shapes

Abstract: The presented method takes a global-to-local (G2L) approach. A generative adversarial network (GAN) is built first to construct the overall structure of the shape, segmented and labeled into parts. A novel conditional auto-encoder (AE) is then added to act as a part-level refiner. The GAN, associated with additional local discriminators and quality losses, synthesizes a voxel-based model and assigns the voxels part labels that are represented in separate channels. The AE is trained to amend the initial synthesis of the parts, yielding more plausible part geometries. We also introduce new means to measure and evaluate the performance of an adversarial generative model. We demonstrate that our global-to-local generative model produces significantly better results than a plain three-dimensional GAN, in terms of both shape variety and the distribution with respect to the training data. Although the quality of the generated shapes is still rather low, our G2L approach introduces a conceptual advancement in the generative modeling of man-made shapes.

Authors/Presenter(s): Hao Wang, Shenzhen University, China

Nadav Schor, Tel Aviv University, Israel

Ruizhen Hu, Shenzhen University, China

Haibin Huang, Face++, United States of America

Daniel Cohen-Or, Shenzhen University, Tel Aviv University, Israel

Hui Huang, Shenzhen University, China

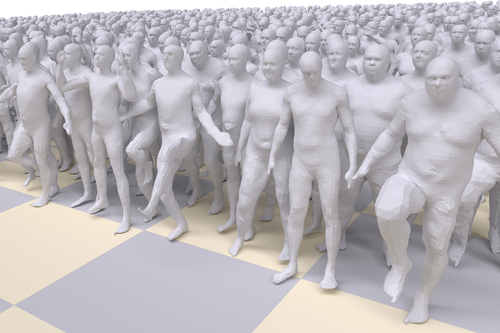

Multi-chart Generative Surface Modeling

Abstract: This paper introduces a 3D shape generative model based on deep neural networks. A new image-like (i.e. tensor) data representation for genus-zero 3D shapes is devised. It is based on the observation that complicated shapes can be well represented by multiple parameterizations (charts), each focusing on a different part of the shape. The new tensor data representation is used as input to Generative Adversarial Networks for the task of 3D shape generation. The 3D shape tensor representation is based on a multi-chart structure that enjoys a shape covering property and scale-translation rigidity. Scale-translation rigidity facilitates high quality 3D shape learning and guarantees unique reconstruction. The multi-chart structure uses as input a dataset of 3D shapes (with arbitrary connectivity) and a sparse correspondence between them. The output of our algorithm is a generative model that learns the shape distribution and is able to generate novel shapes, interpolate shapes, and explore the generated shape space. The effectiveness of the method is demonstrated for the task of anatomic shape generation including human body and bone (teeth) shape generation.

Authors/Presenter(s): Heli Ben-Hamu, Weizmann Institute of Science, Israel

Haggai Maron, Weizmann Institute of Science, Israel

Itay Kezurer, Weizmann Institute of Science, Israel

Gal Avineri, Weizmann Institute of Science, Israel

Yaron Lipman, Weizmann Institute of Science, Israel

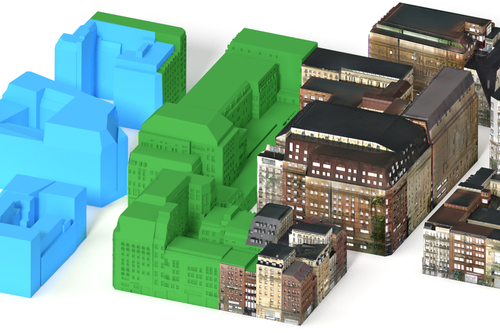

FrankenGAN: Guided Detail Synthesis for Building Mass-models using Style Synchronized GANs

Abstract: Coarse building mass models are now routinely generated at scales ranging from individual buildings to whole cities. Such models can be abstracted from raw measurements, generated procedurally, or created manually. However, these models typically lack any meaningful geometric or texture details, making them unsuitable for direct display. We introduce the problem of automatically and realistically decorating such models by adding semantically consistent geometric details and textures. Building on the recent success of generative adversarial networks (GANs), we propose FrankenGAN, a cascade of GANs that creates plausible details across multiple scales over large neighborhoods. The various GANs are synchronized to produce consistent style distributions over buildings and neighborhoods. We give the user direct control over the variability of the output: the style can be specified interactively via images, and style-adapted sliders control style variability. We test our system on several large-scale examples. The generated outputs are qualitatively evaluated via a set of perceptual studies and are found to be realistic, semantically plausible, and consistent in style.

Authors/Presenter(s): Tom Kelly, UCL, United Kingdom

Paul Guerrero, UCL, United Kingdom

Anthony Steed, UCL, United Kingdom

Peter Wonka, KAUST, Saudi Arabia

Niloy J. Mitra, UCL, United Kingdom

Automatic Unpaired Shape Deformation Transfer

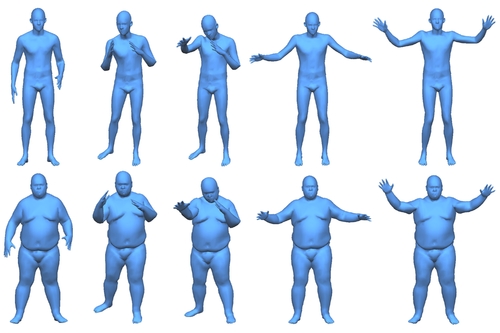

Abstract: Transferring deformation from a source shape to a target shape is a very useful technique in computer graphics. State-of-the-art deformation transfer methods require either point-wise correspondences between source and target shapes, or pairs of deformed source and target shapes with corresponding deformations. However, in most cases, such correspondences are not available and cannot be reliably established using an automatic algorithm. Therefore, substantial user effort is needed to label the correspondences or to obtain and specify such shape sets. In this work, we propose a novel approach to automatic deformation transfer between two unpaired shape sets without correspondences. 3D deformation is represented in a high-dimensional space. To obtain a more compact and effective representation, two convolutional variational autoencoders are learned to encode source and target shapes to their latent spaces. We exploit a Generative Adversarial Network (GAN) to map deformed source shapes to deformed target shapes, both in the latent spaces, which ensures that the shapes obtained from the mapping are indistinguishable from the target shapes. This is still an under-constrained problem, so we further utilize a reverse mapping from target shapes to source shapes and incorporate a cycle consistency loss, i.e. applying both mappings in sequence should return the input shape. This VAE-Cycle GAN (VC-GAN) architecture is used to build a reliable mapping between shape spaces. Finally, a similarity constraint is employed to ensure the mapping is consistent with visual similarity, achieved by learning a similarity neural network that takes the embedding vectors from the source and target latent spaces and predicts the light field distance between the corresponding shapes. Experimental results show that our fully automatic method is able to obtain high-quality deformation transfer results with unpaired data sets, comparable to or better than existing methods where strict correspondences are required.

Authors/Presenter(s): Lin Gao, Institute of Computing Technology, Chinese Academy of Sciences, China

Jie Yang, Institute of Computing Technology, Chinese Academy of Sciences, University of Chinese Academy of Sciences, China

Yi-Ling Qiao, Institute of Computing Technology, Chinese Academy of Sciences, University of Chinese Academy of Sciences, China

Yu-Kun Lai, Cardiff University, United Kingdom

Paul L. Rosin, Cardiff University, United Kingdom

Weiwei Xu, Zhejiang University, China

Shihong Xia, Institute of Computing Technology, Chinese Academy of Sciences, China
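
The cycle consistency idea mentioned in the abstract above (mapping a latent code to the other shape space and back should return the input) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the two toy linear functions stand in for the learned latent-space mappings, the L1 distance is one common choice of penalty, and none of the names come from the paper.

```python
# Illustrative sketch only: toy linear maps stand in for learned
# latent-space mappings. All names are hypothetical.

def map_source_to_target(z):
    """Hypothetical forward mapping G: source latent -> target latent."""
    return [2.0 * v + 1.0 for v in z]

def map_target_to_source(z):
    """Hypothetical reverse mapping F: target latent -> source latent."""
    return [(v - 1.0) / 2.0 for v in z]

def l1_distance(a, b):
    """Mean absolute difference between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def cycle_consistency_loss(source_batch, target_batch, g, f):
    """Average L1 error of the round trips F(G(s)) vs s and G(F(t)) vs t."""
    forward = sum(l1_distance(f(g(s)), s) for s in source_batch) / len(source_batch)
    backward = sum(l1_distance(g(f(t)), t) for t in target_batch) / len(target_batch)
    return forward + backward

# Toy latent codes (assumed values, not data from the paper).
source_latents = [[0.0, 1.0], [2.0, -1.0]]
target_latents = [[1.0, 3.0], [5.0, -1.0]]

loss = cycle_consistency_loss(source_latents, target_latents,
                              map_source_to_target, map_target_to_source)
print(loss)
```

Because the two toy mappings are exact inverses of each other, the round-trip error vanishes; replacing either one with a mapping that is not the inverse of the other makes the loss positive, which is the signal such a term would provide during training.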

The Shape Space of 3D Botanical Tree Models

Abstract: We propose an algorithm for generating novel 3D tree model variations from existing ones via geometric and structural blending. Our approach is to treat botanical trees as elements of a tree-shape space equipped with a proper metric that quantifies geometric and structural deformations. Geodesics, or shortest paths under the metric, between two points in the tree-shape space correspond to optimal deformations that align one tree onto another, including the possibility of expanding, adding, or removing branches and parts. Central to our approach is a mechanism for computing correspondences between trees that have different structures and a different number of branches. The ability to compute geodesics and their lengths enables us to compute continuous blending between botanical trees, which, in turn, facilitates statistical analysis, such as the computation of averages of tree structures. We show a variety of 3D tree models generated with our approach from 3D trees exhibiting complex geometric and structural differences. We also demonstrate the application of the framework in reflection symmetry analysis and symmetrization of botanical trees.

Authors/Presenter(s): Guan Wang, Tongji University, China

Hamid Laga, Murdoch University, The Phenomics and Bioinformatics Research Centre, University of South Australia, Australia

Ning Xie, University of Electronic Science and Technology of China, China

Jinyuan Jia, Tongji University, China

Hedi Tabia, Paris Seine University, France