-

Full Conference Pass (FC)

Full Conference Pass (FC)

-

Full Conference One-Day Pass (1D)

Full Conference One-Day Pass (1D)

Date: Thursday, December 6th

Time: 4:15pm - 6:00pm

Venue: Hall D5 (5F, D Block)

Session Chair(s): Carol O'Sullivan, Trinity College, Dublin,

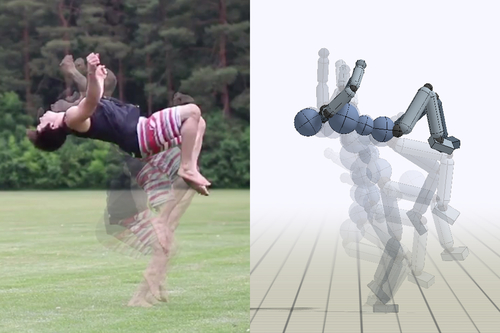

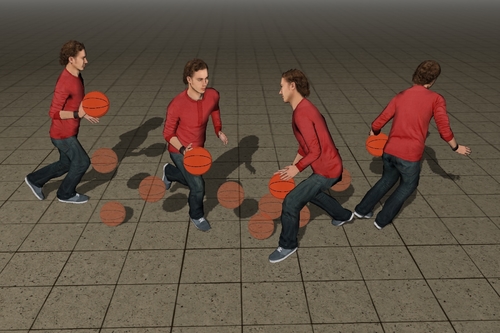

SFV: Reinforcement Learning of Physical Skills from Videos

Abstract: Data-driven character animation based on motion capture can produce highly naturalistic behaviors and, when combined with physics simulation, can provide for natural procedural responses to physical perturbations, environmental changes, and morphological discrepancies. Motion capture remains the most popular source of motion data, but collecting mocap data typically requires heavily instrumented environments and actors. In this paper, we propose a method that enables physically simulated characters to learn skills from videos (SFV). Our approach, based on deep pose estimation and deep reinforcement learning, allows data-driven animation to leverage the abundance of publicly available video clips from the web, such as those from YouTube. This has the potential to enable fast and easy design of character controllers simply by querying for video recordings of the desired behavior. The resulting controllers are robust to perturbations, can be adapted to new settings, can perform basic object interactions, and can be retargeted to new morphologies via reinforcement learning. We further demonstrate that our method can predict potential human motions from still images, by forward simulation of learned controllers initialized from the observed pose. Our framework is able to learn a broad range of dynamic skills, including locomotion, acrobatics, and martial arts.

Authors/Presenter(s): Xue Bin Peng, University of California, Berkeley, United States of America

Angjoo Kanazawa, University of California, Berkeley, United States of America

Jitendra Malik, University of California, Berkeley, United States of America

Pieter Abbeel, University of California, Berkeley, United States of America

Sergey Levine, University of California, Berkeley, United States of America

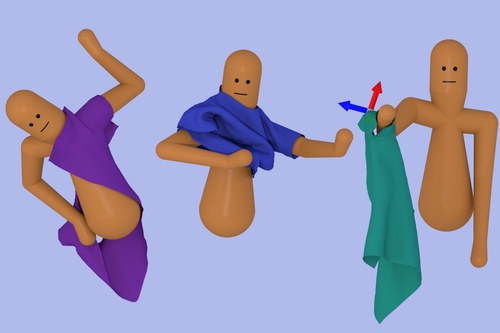

Learning to Dress: Synthesizing Human Dressing Motion via Deep Reinforcement Learning

Abstract: Creating animation of a character putting on clothing is challenging due to the complex interactions between the character and the simulated garment. We take a model-free deep reinforcement learning (deepRL) approach to automatically discovering robust dressing control policies represented by neural networks. While deepRL has demonstrated several successes in learning complex motor skills, the data-demanding nature of the learning algorithms is at odds with the computationally costly cloth simulation required by the dressing task. This paper is the first to demonstrate that, with an appropriately designed input state space and a reward function, it is possible to incorporate cloth simulation in the deepRL framework to learn a robust dressing control policy. We introduce a salient representation of haptic information to guide the dressing process and utilize it in the reward function to provide learning signals during training. In order to learn a prolonged sequence of motion involving a diverse set of manipulation skills, such as grasping the edge of the shirt or pulling on a sleeve, we find it necessary to separate the dressing task into several subtasks and learn a control policy for each subtask. We introduce a policy sequencing algorithm that matches the distribution of output states from one task to the input distribution for the next task in the sequence. We have used this approach to produce character controllers for several dressing tasks: putting on a t-shirt, putting on a jacket, and robot-assisted dressing of a sleeve.

Authors/Presenter(s): Alexander Clegg, The Georgia Institute of Technology, United States of America

Wenhao Yu, The Georgia Institute of Technology, United States of America

Jie Tan, Google Brain, United States of America

Greg Turk, The Georgia Institute of Technology, United States of America

C. Karen Liu, The Georgia Institute of Technology, United States of America

Interactive Character Animation by Learning Multi-Objective Control

Abstract: We present an approach that learns to act from raw motion data for interactive character animation. Our motion generator takes a continuous stream of control inputs and generates the character’s motion in an online manner. The key insight is modeling rich connections between a multitude of control objectives and a large repertoire of actions. The model is trained using Recurrent Neural Network conditioned to deal with spatiotemporal constraints and structural variabilities in human motion. We also present a new data augmentation method that allows the model to be learned even from a small to moderate amount of training data. The learning process is fully automatic if it learns the motion of a single character, and requires minimal user intervention if it has to deal with props and interaction between multiple characters.

Authors/Presenter(s): Kyungho Lee, Seoul National University, South Korea

Seyoung Lee, Seoul National University, South Korea

Jehee Lee, Seoul National University, South Korea

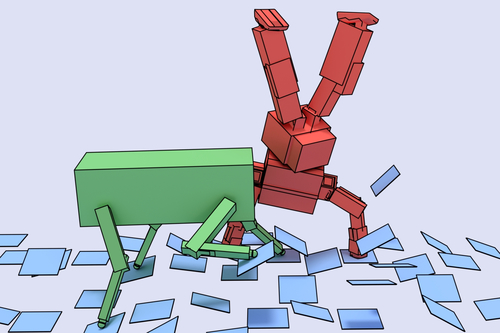

2PAC: Two Point Attractors for Center Of Mass Trajectories in Multi Contact Scenarios

Abstract: Synthesizing motions for legged characters in arbitrary environments is a long-standing problem that has recently received a lot of attention from the computer graphics community. We tackle this problem with a procedural approach that is generic, fully automatic and independent from motion capture data. The main contribution of this paper is a point-mass-model-based method to synthesize Center Of Mass trajectories. These trajectories are then used to generate the whole-body motion of the character. The use of a point mass model results in physically inconsistent motions and joint limit violations when mapped back to a fullbody motion. We mitigate these issues through the use of a novel formulation of the kinematic constraints that allows us to generate a quasi-static Center Of Mass trajectory, in a way that is both user-friendly and computationally efficient. We also show that the quasi-static constraint can be relaxed to generate motions usable for computer animation, at the cost of a moderate violation of the dynamic constraints. Our method was integrated in our open-source contact planner and tested with different scenarios - some never addressed before- featuring legged characters performing non-gaited motions in cluttered environments. The computational efficiency of our trajectory generation algorithm (under one ms to compute one second of trajectory) enables us to synthesize motions in a few seconds, one order of magnitude faster than state-of-the-art methods. Although our method is empirically able to synthesize collision-free motions, the formal handling of environmental constraints is not part of the proposed method, and left for future work.

Authors/Presenter(s): Steve Tonneau, LAAS-CNRS, France

Pierre Fernbach, LAAS-CNRS, France

Andrea Del Prete, Max Planck Institute for Intelligent Systems, Germany

Julien Pettre, Inria, France

Nicolas Mansard, LAAS-CNRS, France