-

Full Conference Pass (FC)

Full Conference Pass (FC)

-

Full Conference One-Day Pass (1D)

Full Conference One-Day Pass (1D)

Date: Friday, December 7th

Time: 11:00am - 12:45pm

Venue: Hall D5 (5F, D Block)

Session Chair(s): Shuang Zhao, University of California, Irvine,

Practical SVBRDF Acquisition of 3D Objects with Unstructured Flash Photography

Abstract: Capturing spatially-varying bidirectional reflectance distribution functions (SVBRDFs) of 3D objects with just a single, hand-held camera (such as an off-the-shelf smartphone or a DSLR camera) is a difficult, open problem. Previous works are either limited to planar geometry, or rely on previously scanned 3D geometry, thus limiting their practicality. There are several technical challenges that need to be overcome: First, the built-in flash of a camera is almost colocated with the lens, and at a fixed position; this severely hampers sampling procedures in the light-view space. Moreover, the near-field flash lights the object partially and unevenly. In terms of geometry, existing multiview stereo techniques assume diffuse reflectance only, which leads to overly smoothed 3D reconstructions, as we show in this paper. We present a simple yet powerful framework that removes the need for expensive, dedicated hardware, enabling practical acquisition of SVBRDF information from real-world, 3D objects with a single, off-the-shelf camera with a built-in flash. In addition, by removing the diffuse reflection assumption and leveraging instead such SVBRDF information, our method outputs high-quality 3D geometry reconstructions, including more accurate high-frequency details than state-of-the-art multiview stereo techniques. We formulate the joint reconstruction of SVBRDFs, shading normals, and 3D geometry as a multi-stage, iterative inverse-rendering reconstruction pipeline. Our method is also directly applicable to any existing multiview 3D reconstruction technique. We present results of captured objects with complex geometry and reflectance; we also validate our method numerically against other existing approaches that rely on dedicated hardware, additional sources of information, or both.

Authors/Presenter(s): Giljoo Nam, KAIST, South Korea

Joo Ho Lee, KAIST, South Korea

Diego Gutierrez, Universidad de Zaragoza, Spain

Min H. Kim, KAIST, South Korea

Simultaneous Acquisition of Polarimetric SVBRDF and Normals

Abstract: Capturing appearance often requires dense sampling in light-view space, which is often achieved in specialized, expensive hardware setups. With the aim of realizing a compact acquisition setup without multiple angular samples of light and view, we sought to leverage an alternative optical property of light, polarization. To this end, we capture a set of polarimetric images with linear polarizers in front of a single projector and camera to obtain the appearance and normals of real-world objects. We encountered two technical challenges: First, no complete polarimetric BRDF model is available for modeling mixed polarization of both specular and diffuse reflection. Second, existing polarization-based inverse rendering methods are not applicable to a single local illumination setup since they are formulated with the assumption of spherical illumination. To this end, we first present a complete polarimetric BRDF (pBRDF) model that can define mixed polarization of both specular and diffuse reflection. Second, by leveraging our pBRDF model, we propose a novel inverse-rendering method with joint optimization of pBRDF and normals to capture spatially-varying material appearance: per-material specular properties (including the refractive index, specular roughness and specular coefficient), per-pixel diffuse albedo and normals. Our method can solve the severely ill-posed inverse-rendering problem by carefully accounting for the physical relationship between polarimetric appearance and geometric properties. We demonstrate how our method overcomes limited sampling in light-view space for inverse rendering by means of polarization.

Authors/Presenter(s): Seung-Hwan Baek, KAIST, South Korea

Daniel S. Jeon, KAIST, South Korea

Xin Tong, Microsoft Research Asia, China

Min H. Kim, KAIST, South Korea

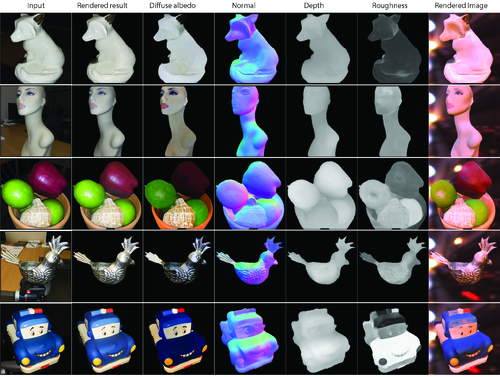

Learning to Reconstruct Shape and Spatially-Varying Reflectance from a Single Image

Abstract: Reconstructing shape and reflectance properties from images is a highly under-constrained problem, and has previously been addressed by using specialized hardware to capture calibrated data or by assuming known (or highly constrained) shape or reflectance. In contrast, we demonstrate that we can recover non-Lambertian, spatially-varying BRDFs and complex geometry belonging to any arbitrary shape class, from a single RGB image captured under a combination of unknown environment illumination and flash lighting. We achieve this by training a deep neural network to regress shape and reflectance from the image. Our network is able to address this problem because of three novel contributions: first, we build a large-scale dataset of procedurally generated shapes and real-world complex SVBRDFs that approximate real world appearance well. Second, single image inverse rendering requires reasoning at multiple scales, and we propose a cascade network structure that allows this in a tractable manner. Finally, we incorporate an in-network rendering layer that aids the reconstruction task by handling global illumination effects that are important for real-world scenes. Together, these contributions allow us to tackle the entire inverse rendering problem in a holistic manner and produce state-of-the-art results on both synthetic and real data.

Authors/Presenter(s): Zhengqin Li, UC San Diego, United States of America

Zexiang Xu, UC San Diego, United States of America

Ravi Ramamoorthi, UC San Diego, United States of America

Kalyan Sunkavalli, Adobe, United States of America

Manmohan Chandraker, UC San Diego, United States of America

Relighting Humans: Occlusion-Aware Inverse Rendering for Full-Body Human Images

Abstract: Relighting of human images has various applications in image synthesis. For relighting, we must infer albedo, shape, and illumination from a human portrait. Previous techniques rely on human faces for this inference, on the basis of spherical harmonics (SH) lighting. However, because they often ignore light occlusion, inferred shapes are biased and relit images are unnaturally bright particularly at hollowed regions such as armpits, crotches, or garment wrinkles. This paper introduces the first attempt to directly infer light occlusion in the SH formulation. Based on supervised learning using convolutional neural networks (CNNs), we infer not only an albedo map, illumination but also a light transport map that encodes occlusion as nine SH coefficients per pixel. The main difficulty in this inference is the lack of training datasets compared to unlimited variations of human portraits. Surprisingly, geometric information including occlusion can be inferred plausibly even with a small dataset of synthesized human figures, by carefully preparing the dataset so that the CNNs can exploit the data coherency. Our method accomplishes more realistic relighting than the occlusion-ignored formulation.

Authors/Presenter(s): Yoshihiro Kanamori, University of Tsukuba, Japan

Yuki Endo, University of Tsukuba, Toyohashi University of Technology, Japan