- Full Conference Pass (FC)


- Full Conference One-Day Pass (1D)


Date: Wednesday, December 5th

Time: 4:15pm - 6:00pm

Venue: Hall D5 (5F, D Block)

Session Chair(s): Karen Liu, Georgia Institute of Technology, United States of America

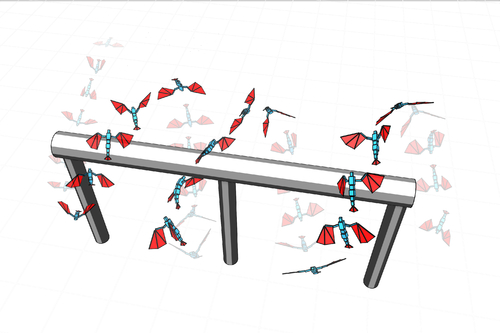

Aerobatics Control of Flying Creatures via Self-Regulated Learning

Abstract: Flying creatures in animated films often perform highly dynamic aerobatic maneuvers, which push their exercise capacity to the extreme and demand skillful control. Designing physics-based controllers (a.k.a. control policies) for aerobatic maneuvers is very challenging because the dynamic states remain in unstable equilibrium most of the time during aerobatics. Recently, Deep Reinforcement Learning (DRL) has shown its potential for constructing physics-based controllers. In this paper, we present a new concept, Self-Regulated Learning (SRL), which is combined with DRL to address the aerobatics control problem. The key idea of SRL is to allow the agent to take control over its own learning using an additional self-regulation policy. This policy allows the agent to regulate its goals according to the capability of the current control policy. The control and self-regulation policies are learned jointly as learning progresses. Self-regulated learning can be viewed as the agent building its own curriculum and seeking compromises on its goals. The effectiveness of our method is demonstrated with physically simulated creatures performing aerobatic skills of sharp turning, rapid winding, rolling, soaring, and diving.
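The abstract describes the self-regulation policy only at a high level, and in SRL it is learned jointly with the controller. As a toy illustration of what "regulating goals according to the capability of the current control policy" can mean, the sketch below replaces the learned self-regulation policy with a hand-written rule (an assumption, not the authors' method): the working goal, say a turn angle in degrees, advances toward the true target after a success and backs off after a failure. The function `self_regulated_goals` and the `capability_at` model are hypothetical stand-ins for a controller that improves over training.

```python
from typing import Callable, List, Tuple


def self_regulated_goals(
    target: float,
    capability_at: Callable[[int], float],
    episodes: int,
    step: float = 10.0,
) -> List[Tuple[float, bool]]:
    """Toy goal-regulation loop using a fixed rule (NOT a learned SRL policy).

    Returns one (goal attempted, succeeded) pair per episode.
    """
    goal = 0.0
    history: List[Tuple[float, bool]] = []
    for episode in range(episodes):
        # Hypothetical success test: the controller achieves any goal up to its capability.
        succeeded = goal <= capability_at(episode)
        history.append((goal, succeeded))
        if succeeded:
            # Ask for more, but never beyond the true target.
            goal = min(target, goal + step)
        else:
            # Compromise: relax the goal by half a step.
            goal = max(0.0, goal - step / 2)
    return history


# Hypothetical controller whose capability grows by 5 degrees per episode.
history = self_regulated_goals(
    target=90.0, capability_at=lambda ep: 20.0 + 5.0 * ep, episodes=30
)
print(history[5])   # (50.0, False): the goal briefly outpaced the controller
print(history[-1])  # (90.0, True): the full target is eventually reached
```

The occasional failures, followed by a relaxed goal, play the role of the "compromise" mentioned in the abstract; in SRL itself this adjustment is produced by a learned policy rather than a fixed step rule.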

Authors/Presenter(s): Jungdam Won, Seoul National University, South Korea

Jungnam Park, Seoul National University, South Korea

Jehee Lee, Seoul National University, South Korea

Flycon: Real-time Environment-independent Multi-view Human Pose Estimation with Aerial Vehicles

Abstract: We propose a real-time method for the infrastructure-free estimation of articulated human motion. The approach leverages a swarm of camera-equipped flying robots and jointly optimizes the swarm's and skeletal states, which include the 3D joint positions and a set of bones. Our method makes it possible to track the motion of human subjects, for example, an athlete, over long time horizons and long distances, in challenging settings and at large scale, where fixed-infrastructure approaches are not applicable. The proposed algorithm uses active infrared markers, runs in real time, and accurately estimates robot and human pose parameters online without the need for accurately calibrated or stationary mounted cameras. Our method (i) estimates a global coordinate frame for the MAV (micro aerial vehicle) swarm, (ii) jointly optimizes the human pose and relative camera positions, and (iii) estimates the length of the human bones. The entire swarm is then controlled via a model predictive controller to maximize visibility of the subject from multiple viewpoints, even under fast motion such as jumping or jogging. We demonstrate our method in a number of difficult scenarios, including the capture of long locomotion sequences at the scale of a triplex gym, in non-planar terrain, while climbing, and in outdoor scenarios.
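Flycon jointly optimizes camera (MAV) poses and skeleton parameters, which is well beyond a short snippet. One basic building block of multi-view, marker-based capture is recovering a marker's 3D position from its observations in several cameras. The sketch below is standard linear (DLT) triangulation, not the paper's optimizer. It assumes the 3x4 camera matrices are already known, whereas Flycon estimates the relative camera positions jointly with the human pose. The camera setup and marker position are hypothetical.

```python
import numpy as np


def project(P: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Project a 3D point X (shape (3,)) with a 3x4 camera matrix P to 2D image coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def triangulate_dlt(projections, points_2d) -> np.ndarray:
    """Linear (DLT) triangulation of one 3D point from two or more views.

    projections: list of 3x4 camera matrices
    points_2d:   list of (u, v) observations of the same marker, one per camera
    """
    rows = []
    for P, (u, v) in zip(projections, points_2d):
        # Each view contributes two linear constraints on the homogeneous point X.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


# Two hypothetical cameras with identity intrinsics; the second is centered 1 m along +x.
P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])
marker = np.array([0.5, 0.2, 4.0])

observations = [project(P1, marker), project(P2, marker)]
estimate = triangulate_dlt([P1, P2], observations)
print(estimate)  # close to [0.5, 0.2, 4.0]
```

Each additional camera adds two rows to `A`, and the SVD then returns the solution that minimizes the algebraic error over all views, which is why this formulation extends naturally to a swarm of more than two cameras.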

Authors/Presenter(s): Tobias Nägeli, ETH Zürich, Switzerland

Samuel Oberholzer, ETH Zurich, Switzerland

Silvan Plüss, ETH Zurich, Switzerland

Javier Alonso-Mora, TU Delft, Netherlands

Otmar Hilliges, ETH Zurich, Switzerland

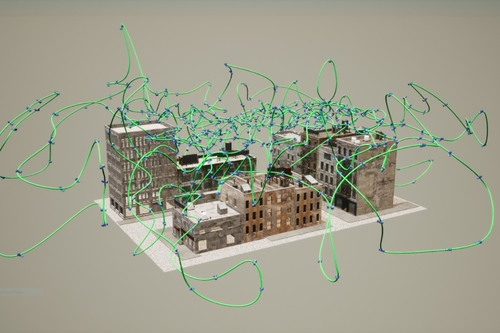

Aerial Path Planning for Urban Scene Reconstruction: A Continuous Optimization Method and Benchmark

Abstract: Small unmanned aerial vehicles (UAVs) are ideal capture devices for high-resolution urban 3D reconstructions using multi-view stereo. Nevertheless, practical considerations such as safety often mean that the scan target is accessible only for a short time, especially in urban environments. It therefore becomes crucial to perform both view and path planning to minimize flight time while ensuring complete and accurate reconstructions. In this work, we address the challenge of automatic view and path planning for UAV-based aerial imaging with the goal of urban reconstruction from multi-view stereo. To this end, we develop a novel continuous optimization approach using heuristics for multi-view stereo reconstruction quality and apply it to the problem of path planning. To evaluate our method, we introduce and describe a detailed benchmark dataset for UAV path planning in urban environments, which can also be used to evaluate future research on this topic. Using this dataset and both synthetic and real data, we demonstrate survey-grade urban reconstructions with ground resolutions of 1 cm or better over large areas (30,000 m²).
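To relate the reported ground resolution of 1 cm or better to flight geometry, the standard ground sampling distance (GSD) relation for a nadir-pointing pinhole camera is useful. This is a general photogrammetry formula, not the paper's reconstruction-quality heuristic, and the camera parameters below are hypothetical. For oblique or façade views, the camera-to-surface distance takes the place of altitude.

```python
def ground_sampling_distance(
    altitude_m: float, focal_length_mm: float, sensor_width_mm: float, image_width_px: int
) -> float:
    """Ground footprint of one pixel (meters per pixel) for a nadir-pointing pinhole camera."""
    # sensor_width_mm / image_width_px is the pixel pitch; mm units cancel with the focal length.
    return altitude_m * sensor_width_mm / (focal_length_mm * image_width_px)


def max_altitude_for_gsd(
    target_gsd_m: float, focal_length_mm: float, sensor_width_mm: float, image_width_px: int
) -> float:
    """Highest altitude (meters) at which each pixel still covers at most target_gsd_m."""
    return target_gsd_m * focal_length_mm * image_width_px / sensor_width_mm


# Hypothetical camera: 8.8 mm lens, 13.2 mm wide sensor, 5472 px wide images.
altitude = max_altitude_for_gsd(0.01, focal_length_mm=8.8, sensor_width_mm=13.2, image_width_px=5472)
print(f"max altitude for 1 cm GSD: {altitude:.2f} m")  # about 36.48 m
```

Because GSD grows linearly with distance, halving the target resolution halves the allowed distance to the surface, which in turn increases the number of views and the flight time needed to cover an area; this is the trade-off that view and path planning has to balance.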

Authors/Presenter(s): Neil Smith, King Abdullah University of Science & Technology, Saudi Arabia

Nils Moehrle, TU Darmstadt, Germany

Michael Goesele, TU Darmstadt, Germany

Wolfgang Heidrich, King Abdullah University of Science & Technology, Saudi Arabia

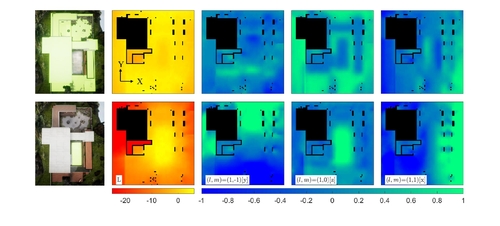

Ambient Sound Propagation

Abstract: Ambient sounds arise from a massive superposition of chaotic events distributed over a large area or volume, such as waves breaking on a beach or rain hitting the ground. The directionality and loudness of these sounds as they propagate in complex 3D scenes vary with listener location, providing cues that distinguish indoors from outdoors and reveal portals and occluders. We show that ambient sources can be approximated using an ideal notion of spatio-temporal incoherence and develop a lightweight technique to capture their global propagation effects. Our approach precomputes a single FDTD simulation using a sustained source signal whose phase is randomized over frequency and source extent. It then extracts a spherical harmonic encoding of the resulting steady-state distribution of power over direction and position in the scene using an efficient flux density formulation. The resulting parameter fields are smooth and compressible, requiring only a few MB of memory per extended source. We also present a fast binaural rendering technique that exploits phase incoherence to reduce filtering cost.
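The abstract does not specify the spherical harmonic order or the details of the flux density formulation. As an illustration of the general idea of encoding a directional power distribution compactly, the sketch below projects power arriving from a few discrete directions onto first-order real spherical harmonics (four coefficients, orthonormal basis, no Condon-Shortley phase) and evaluates the result back. The sample directions and powers are hypothetical, and this is not the paper's encoding pipeline.

```python
import math

# Real spherical harmonic normalization constants for orders 0 and 1.
C0 = 0.5 * math.sqrt(1.0 / math.pi)
C1 = math.sqrt(3.0 / (4.0 * math.pi))


def sh_basis(d):
    """First-order real SH basis for unit direction d = (x, y, z).

    Coefficient order: (l, m) = (0, 0), (1, -1), (1, 0), (1, 1).
    """
    x, y, z = d
    return [C0, C1 * y, C1 * z, C1 * x]


def project_power(samples):
    """Project (unit_direction, power) arrivals onto 4 SH coefficients.

    Each arrival is treated as a point (delta) on the sphere, so its
    projection onto a basis function is simply power * basis(direction).
    """
    coeffs = [0.0] * 4
    for d, power in samples:
        for i, b in enumerate(sh_basis(d)):
            coeffs[i] += power * b
    return coeffs


def evaluate(coeffs, d):
    """Reconstruct the band-limited power density in direction d."""
    return sum(c * b for c, b in zip(coeffs, sh_basis(d)))


# Hypothetical arrivals: strong power from +x, weaker power from below (-z).
samples = [((1.0, 0.0, 0.0), 1.0), ((0.0, 0.0, -1.0), 0.5)]
coeffs = project_power(samples)
print(coeffs)
print(evaluate(coeffs, (1.0, 0.0, 0.0)), evaluate(coeffs, (-1.0, 0.0, 0.0)))
```

Four numbers per position is what keeps such a field small, at the cost of angular sharpness. Note that the reconstruction toward -x comes out slightly negative: this is ordinary truncation ringing of a low-order fit, and it would need clamping or windowing if used directly as power.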

Authors/Presenter(s): Zechen Zhang, Cornell University, United States of America

Nikunj Raghuvanshi, Microsoft Corporation, United States of America

John Snyder, Microsoft Corporation, United States of America

Steve Marschner, Cornell University, United States of America